First Operating System -- Part One

How did we go from rewiring plugboards to operating systems? A two-part history.

I was not intending to trace the history of software very much. But this particular thread shows what I consider the normal path of invention. We rarely have a case where something appears fully formed. Instead, we invent or discover an improvement to how things are done now, then modify it over time, and end up in a very different place than where we started.

So it is with operating systems. No one looked at those early machines, then tried to build a piece of software to manage the hardware, juggle running multiple programs by multiple users, and keep the resources (memory, disk, etc.) separated so no one could fiddle with someone else’s work. No one could have had those thoughts at the time. They just thought of things that would make “what we have now” a bit better than “what we had before”.

There’s also the problem of pointing to something and saying, “This is the first operating system.” Even the historians who are willing to pick one still point to other work going on at the time that might also be considered the first OS.

We’ll walk through this from a time when the concept of an OS would have made no sense, show where the need for one arose, and look at which one(s) were created. Most importantly, we’ll talk about what problem those first OSes solved, because it is not a problem most people reading this will have experienced, or even imagined.

Before Software

ENIAC is often considered the first computer. Like most such machines, it was designed to be a super-fast calculator. But it was also flexible: it wasn’t built for a single calculation, or even a single type of calculation. It’s probably hard for us to grasp a time when “computors” were humans, and engineers carried books of logarithms with them. My life bridges the era when electronics were expensive and not very powerful, yet I could buy a slide rule and do far more complex calculations. At that time, a 4-function calculator cost about 8 months of my allowance, but a slide rule (“slipstick”) was only 2 weeks’ worth. Slide rules were carried in a sheath (much like a scabbard) hung from the belt.

ENIAC was far more complex, and capable of far more complex calculations. At the time, artillery needed “firing tables”, which were really just computations of ballistic trajectories. These tables let you take the gun’s settings and quickly look up where the shell would land. In the 1940s, this sort of work occupied a huge number of humans, and the cost of NOT building ENIAC was measured in lives.

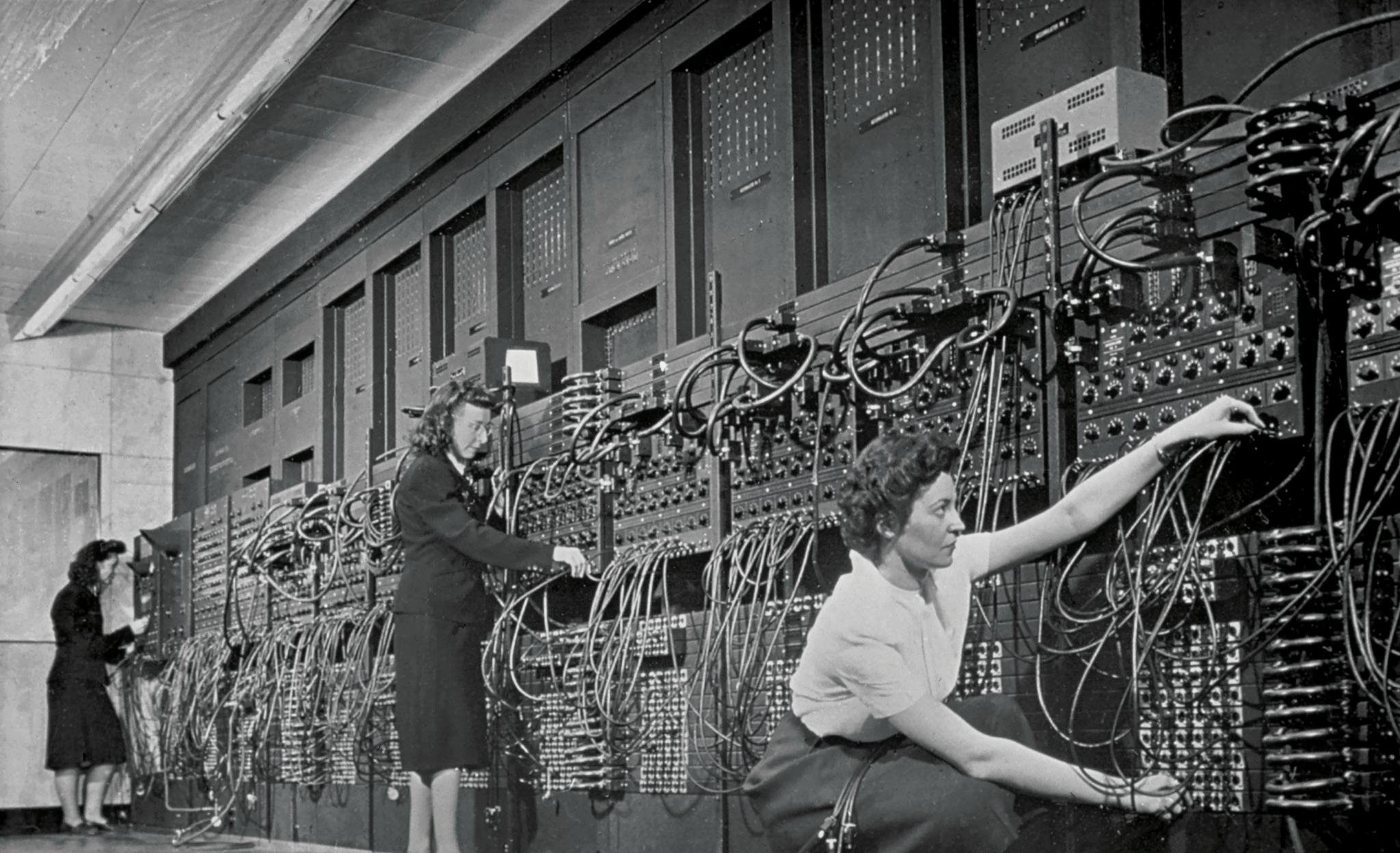

Each of those squares was a cabinet, 8 feet (2.4 m) tall and 3 feet (0.9 m) deep. Together, those cabinets ran 100 linear feet (30 m), a footprint of about 300 sq. ft (28 sq. m) inside an even larger room. It seems crazy to our eyes that a simple accumulator, just a register in modern terms, would take up a full cabinet. But that’s the era we’re talking about.

The closest thing to “programming” for this beast was a set of wiring instructions. You specialized the machine for a specific calculation by physically connecting individual logic elements together.

When ENIAC was first put to use, its total storage was 20 locations: one ten-digit number in each of its 20 accumulators. ENIAC was later modified and even gained ways to “program” it, though that seems to be more a mechanization of the wiring process than what we’d call a program today. And the machine didn’t stay running very long:

“The ENIAC is complicated. It has about 20,000 tubes and thousands of switches and plug-in contacts. Since any of these or other things may fail, it is not surprising that the duration of an average run without some failure is only a few hours. For example, a power failure (of which there were 9 in November and December, 1947) may spoil four or five tubes. Some of these failures are not clear-cut failures like a short, but borderline failures which may cause a tube to operate improperly once in a hundred to a thousand times.” [1]

So I view ENIAC as something conceived along the lines of existing mechanical calculators, run by human operators. Its value was that calculations that took a room full of humans days or weeks to do could be done in hours on ENIAC. And in wartime, that time savings was vital to the effort.

Stored Programs

Based on experience with ENIAC, and visits to other labs doing similar work, John von Neumann developed an abstract view of the computer. He focused on the logical structure, not the physical structure. His work became the basis for the next generation of computers, known as “stored program” systems.

Put simply, this just means you use some of your storage to hold the list of instructions that the computer will follow, and the rest of your storage for the data it will operate on. This seems blindingly obvious today, but those early machines barely had a concept of storage, and what did function as storage was generally very, very small. Think of these machines as great hulking dinosaurs, with the memory of a goldfish.
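To make the idea concrete, here is a minimal sketch in Python of a toy stored-program machine. The instruction set (`LOAD`, `ADD`, `STORE`, `HALT`), the `(opcode, address)` format, and the single accumulator are illustrative assumptions, not the design of any historical machine. The point is only that the program and its data live in the same storage, so changing the calculation means changing what is stored, not rewiring anything.

```python
def run(memory):
    """Toy stored-program machine (hypothetical design, for illustration only).

    `memory` is one list holding both instructions and data. Instructions are
    (opcode, address) tuples; data cells are plain integers.
    """
    acc = 0  # single accumulator register
    pc = 0   # program counter: index of the next instruction in memory
    while True:
        op, addr = memory[pc]  # fetch the instruction from the same storage as the data
        pc += 1
        if op == "LOAD":
            acc = memory[addr]
        elif op == "ADD":
            acc += memory[addr]
        elif op == "STORE":
            memory[addr] = acc
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {op!r} at address {pc - 1}")


# Cells 0-3 hold the program; cells 4-6 hold the data it operates on.
memory = [("LOAD", 4), ("ADD", 5), ("STORE", 6), ("HALT", 0), 2, 3, 0]
print(run(memory)[6])  # 5
```

Running a different calculation only requires loading a different list into storage, which is exactly the step away from plugboard wiring described above.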

I’ve talked about how this worked in my other post, so I won’t go into it more here. But the change from instructions in wiring to instructions in storage was the next step towards an operating system.

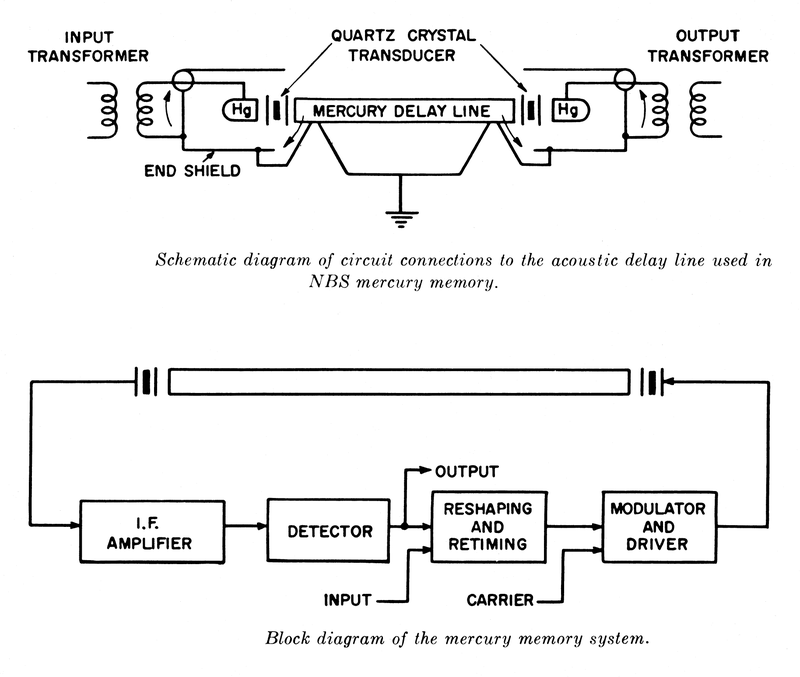

As technology improved, so did storage. Von Neumann had described a machine with two types of storage, one permanent and one temporary. We’d call these “disk” and “memory”, but back then, the devices for such things were wildly different. Permanent storage was often either a printer or a card-puncher (see the ENIAC floorplan). Temporary storage could be built from vacuum tube logic circuits, but was more often something like an “acoustic delay line”.

A delay-line memory was accessed serially: you had to wait for the other bits to pass by before you could read the word you were interested in. But it was much faster than other memory devices of the time. ENIAC had shown that a fully electronic machine could leave behind the mixture of tubes and relays that earlier machines had used. On a relay machine, the speed of memory wasn’t as important. But all-electronic machines were much faster, so the delay line’s speed helped ENIAC’s successors, such as EDVAC and EDSAC, realize the gains of an all-electronic design.
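A toy model shows why serial access matters. The Python sketch below treats the line as a fixed ring of words that advances one word per tick. The class name, the word-level granularity (real lines circulated individual bits), and the numeric addressing are all assumptions made for illustration. Reading a word means waiting until it reaches the output end, so the cost of a read depends on where the line happens to be when you ask.

```python
from collections import deque


class DelayLineMemory:
    """Toy recirculating delay line (hypothetical model, not a real machine's timing).

    Words circulate in a fixed order; only the word at the output end can be
    read, and after leaving the output it is fed back into the input.
    """

    def __init__(self, words):
        self.line = deque(words)  # line[0] is the word currently at the output end
        self.ticks = 0            # word-times elapsed so far

    def tick(self):
        # Move the output word to the back: it re-enters the line at the input.
        self.line.rotate(-1)
        self.ticks += 1

    def read(self, address):
        # Assumed addressing: word `address` was at the output at tick 0,
        # so it is back at the output whenever ticks % size == address.
        size = len(self.line)
        while self.ticks % size != address:
            self.tick()  # nothing to do but wait for the word to come around
        return self.line[0]


mem = DelayLineMemory([10, 20, 30, 40, 50, 60, 70, 80])
print(mem.read(5), mem.ticks)  # 60 5
print(mem.read(2), mem.ticks)  # 30 10
```

Reading word 2 right after word 5 costs five more ticks, because the line has to circulate past words 5, 6, 7, 0, and 1 first.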

The delay line was originally developed for the brand-new RADAR systems being deployed. There, its only job was to “de-clutter” the radar scopes of fixed objects: each radar return was delayed by one pulse interval and subtracted from the next return. Echoes from things that were not moving would cancel out, leaving just the images of moving objects (like aircraft or ships). It’s a prime example of how things are created by re-use, rather than being invented out of nothing.
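The cancellation trick can be sketched as a simple “delay and subtract” over successive radar sweeps. In this Python toy, each sweep is a list of echo strengths by range bin. That is a deliberate simplification: real moving-target indicators compared the phase of coherent returns, not just where an echo appeared. The function name and the sample data are hypothetical.

```python
def cancel_fixed_echoes(sweeps):
    """Toy two-pulse canceller: subtract each sweep from the previous one.

    `delayed` plays the role of the delay line, holding the last sweep for
    exactly one pulse interval.
    """
    delayed = None
    outputs = []
    for sweep in sweeps:
        if delayed is not None:
            # Echoes identical on both sweeps (fixed objects) cancel to zero.
            outputs.append([now - before for now, before in zip(sweep, delayed)])
        delayed = sweep  # hold this sweep until the next pulse arrives
    return outputs


# A hill sits in range bin 1 on every sweep; an aircraft moves from bin 3 to bin 4.
sweeps = [[0, 9, 0, 5, 0],
          [0, 9, 0, 0, 5]]
print(cancel_fixed_echoes(sweeps))  # [[0, 0, 0, -5, 5]]
```

The hill vanishes from the output, while the aircraft shows up as a change between sweeps.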

Commercial Computing

Let’s fast-forward a bit. As computers matured and their principles were tested and refined, it was only natural to apply those techniques to commercial work. Plus, the war had ended, and funding became harder to get. Most of the early pioneers took a shot at commercial products. Some already had a foot in industry: Vannevar Bush, who was deeply involved in wartime research and had built analog computers in the late 1920s, had co-founded the company that became Raytheon back in 1922. Today, Raytheon is still one of the largest defense contractors to the US Government.

The creators of ENIAC, John Mauchly and J. Presper Eckert, formed the Eckert-Mauchly Computer Corporation. They built the UNIVAC, one of the first computers designed for business applications.

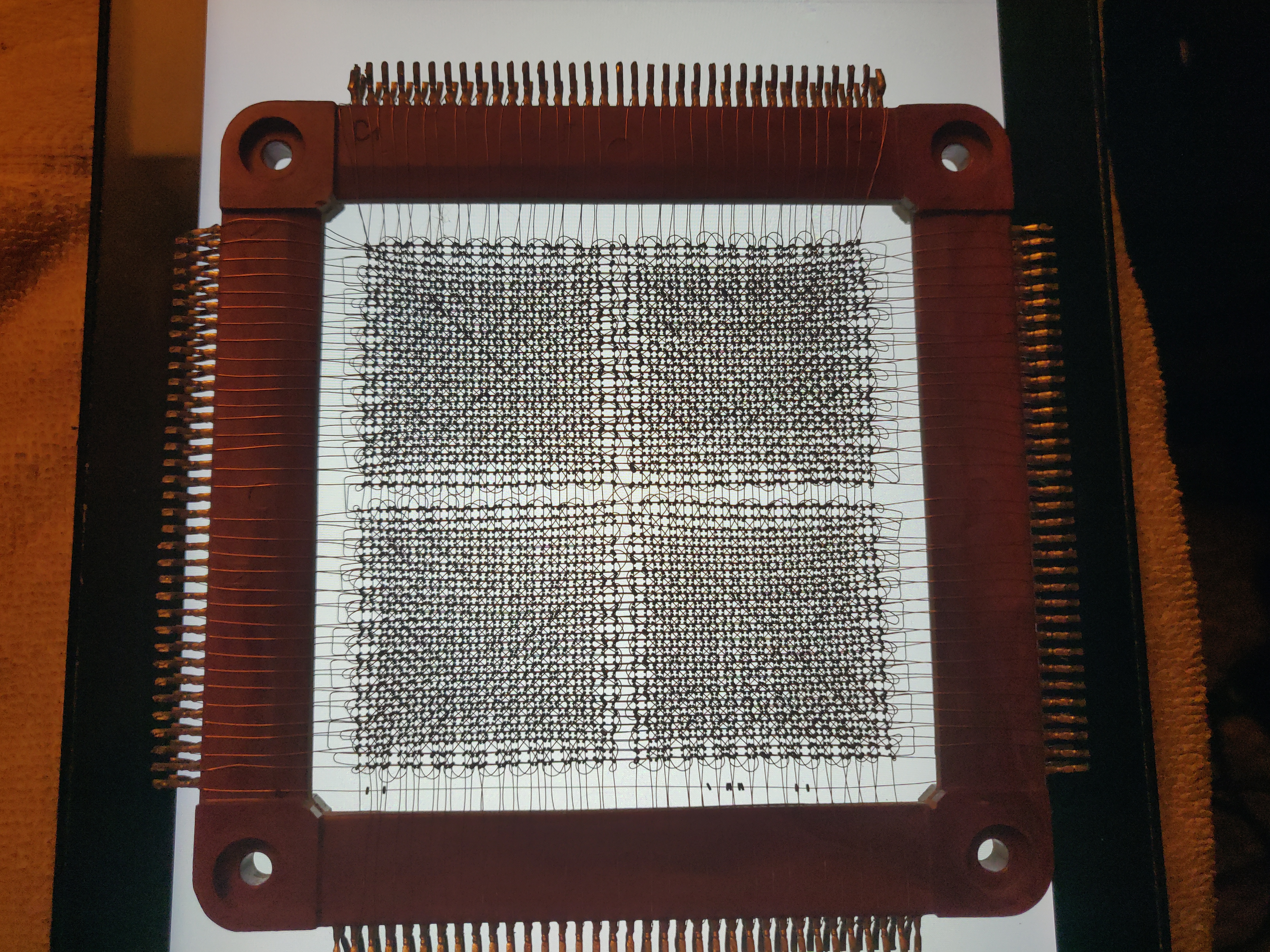

Time passed, and the computer business became a reality. But these were still hulking beasts, requiring highly technical people to operate them. The technology had improved, and computing speed had increased. In place of heated mercury “tanks” holding multiple acoustic delay lines, machines now had magnetic-core memory. Punch cards were still around, but data could now also be stored on magnetic tape.

Actual programming languages appeared, starting with “assembly language” (similar to the EDSAC “orders”, but not hand-translated into their corresponding numeric values), followed by ground-breaking languages like FORTRAN and COBOL.

But computers were very expensive, and to make the investment pay off, you had to keep them busy 24/7. Since they could only run one program at a time, anyone wanting to run a program had to schedule time on it. And computer time was carefully tracked, so it could be charged back to the originating department as an expense. Here’s a good description of life as a computer operator at that time:

“Each user was allocated a minimum 15-minute slot, of which time he usually spent 10 minutes in setting up the equipment to do his computation … By the time he got his calculation going, he may have had only 5 minutes or less of actual computation completed – wasting two thirds of his time slot.” [2]

Of note is the phrase “setting up the equipment”. At this time, there were no software abstractions for the various pieces of hardware. If your program was going to read data from a tape drive (perhaps the payroll data), you had to mount that tape and connect the drive to the computer at the location where your program expected to find it. Then there was the program itself. If it was a FORTRAN program, you also had to mount the FORTRAN compiler tape, add instructions to your “deck” of punched cards telling the machine to read the compiler from that tape, then feed your program (on the cards) to the compiler, which produced the executable program in memory. More punched cards then started that program, which read from the data tape. And hopefully you remembered to connect the check-printing machine, so that after calculating pay and deductions, the program could write the checks themselves.

Automation

In the early days, this sort of work was done by the people who had designed and built the machines. They also trained others, who became the machine’s operators. That left engineers free to devise the calculations needed for the desired results, because no one really wanted to spend 10 minutes setting up gear so they could get 5 minutes of actual computing done.

In the commercial world, you still had human machine operators. You’d take your payroll program down to the computer room and hand it over to the operators. They were the ones who did all the donkey work: mount the tapes, configure the connections, load the punch card deck (often several 2-foot trays of punched cards), and fire off the job. If there was an error, they had to determine whether it was a machine error (e.g., the tape wasn’t loaded correctly) or a logic error (your program had a bug), then gather up all your submitted materials and all the error output, and get them back to you.

Good system administrators are lazy: anything dull and repetitive is a good candidate for automation. That was true for those early computer operators as well. I haven’t found any documentation of machine-room operations that describes this sort of donkey work in detail, but some recollections have been written down, such as the quote above. So I’m speculating a bit here, but the features of the first forays into automating machine-room operations tell us the “what”, if not the “why”.

Next:

How computer operators automated the boring parts of their jobs, and took the first step towards an operating system.